OpenAI Whisper - robots with human-level speech recognition skills?

September 30, 2022 – Tommi Holmgren, VP of Solutions

You’ve probably heard or read about OpenAI by now. They have been busy releasing AI models that push the envelope in content generation, natural language, code generation, visual classification and, most recently, speech recognition.

OpenAI Whisper promises to “approach human level robustness and accuracy on English speech recognition.” The neural net in question is publicly available as a Git repository, so what could be better than giving our robots human-level skills in English? Scary, maybe, but let me assure you that as a result of this experiment, the robots did not take over the world.

This fun experiment highlights some game-changing features of the Robocorp Gen2 RPA platform.

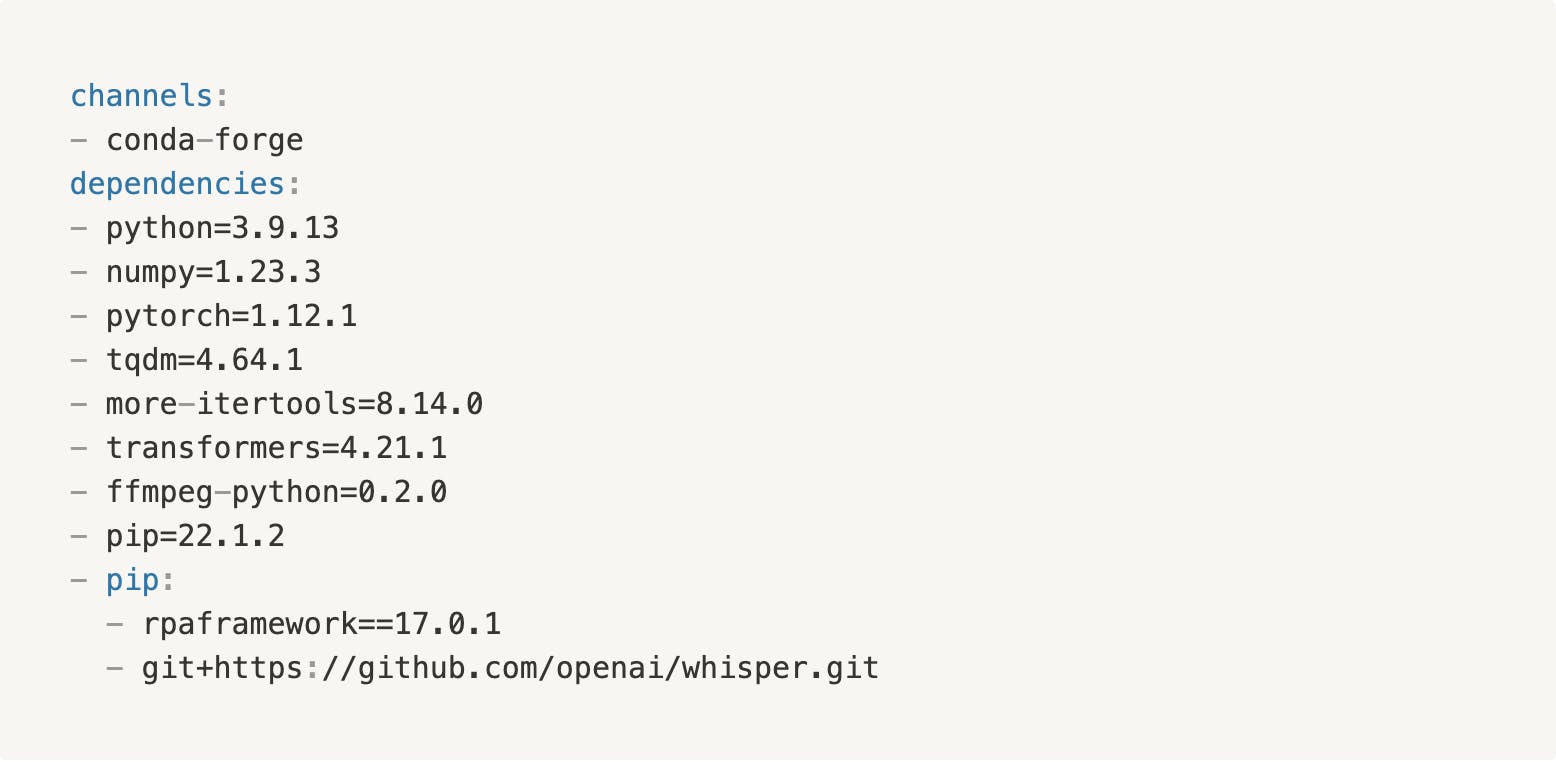

First, the way the environments are managed makes it extremely easy to add new dependencies to your robots, even from outside the traditional conda-forge and pip sources. Most importantly, you can orchestrate the bots reliably in any environment, without setting up these libraries manually.

Second, extending the capabilities of Robot Framework’s built-in keyword libraries with “anything Python” is a simple task. You’ll see how.

Setting things up

Starting with a basic Robot Framework template in VS Code (with Robocorp Code and Robot Framework Language Server installed), the first step is to get the dependencies in place. OpenAI’s repository with examples walks through the installation, and those components need to be in place in your robot’s conda.yaml file. Here’s what you need.

The last line is where greatness happens. You can simply add a public git repository under pip, and ta-da, the library gets added to your environment.

Note that the setup here was only tested on Linux. For Windows, you’ll need to find another source for pytorch as it’s not available from conda-forge. For Mac, you’ll need to sort out the right libraries for either Intel or Apple silicon versions.

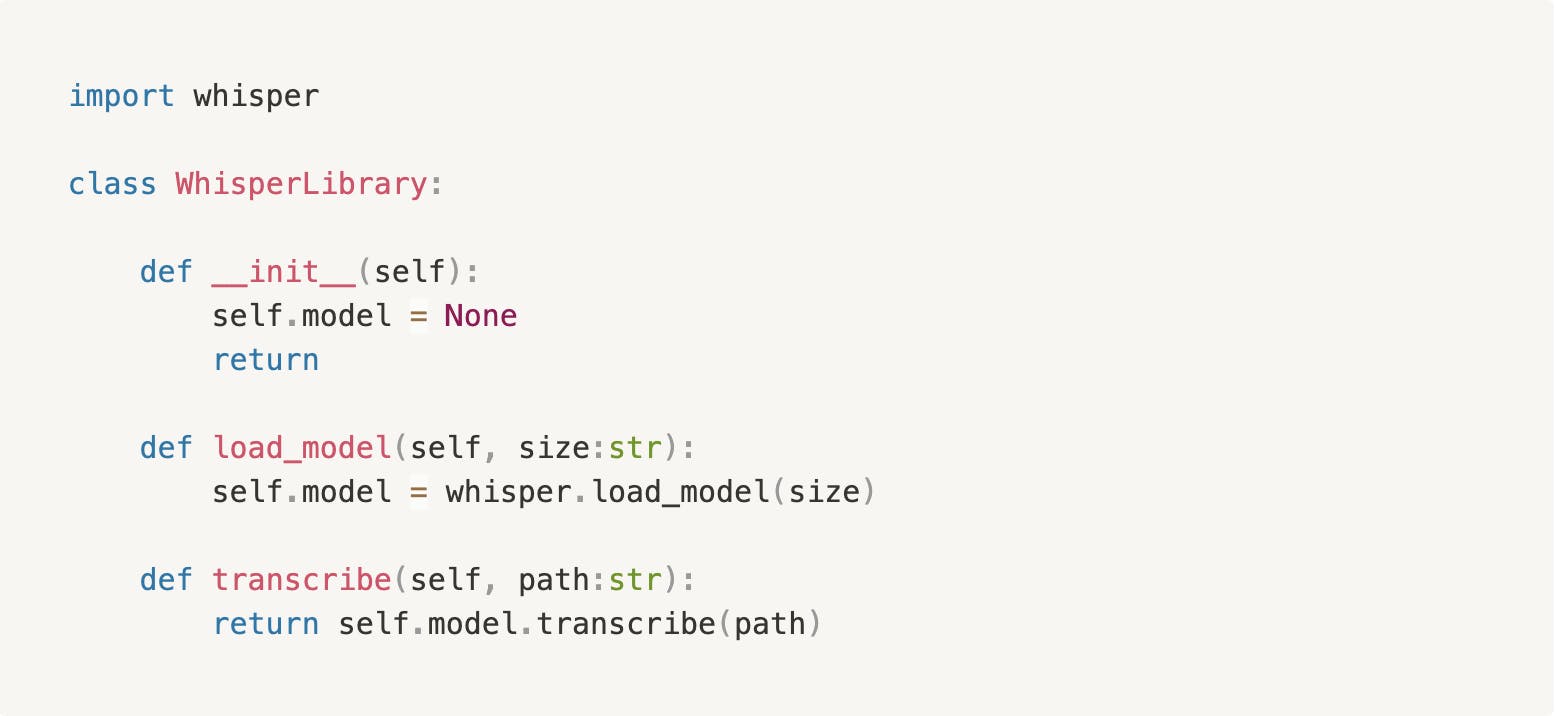

Wrapper for the Python library

Next up, let’s create a simple wrapper for the whisper Python library in a file named WhisperLibrary.py. Strictly speaking, this is not necessary, and you could just as well use the library directly in the robot code. However, especially with more complex libraries, a wrapper keeps your robot code neat and easy to read.
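As a rough idea of what such a wrapper can look like, here is a minimal sketch. It is not the exact file from this robot: the class layout, the `loader` parameter and the default model name `base` are my assumptions for illustration. The only whisper calls it relies on are `whisper.load_model` and the model's `transcribe` method, which returns a dictionary with a `text` key.

```python
class WhisperLibrary:
    """Sketch of a keyword library wrapping the whisper package.

    The `loader` argument is a hypothetical hook (not part of whisper)
    that makes it possible to try the class without the real model.
    """

    def __init__(self, model_name="base", loader=None):
        self._model_name = model_name
        self._loader = loader
        self._model = None

    def _get_model(self):
        # Load the model lazily and keep it for later calls in the same run.
        if self._model is None:
            if self._loader is None:
                import whisper  # imported here so the module loads without it
                self._loader = whisper.load_model
            self._model = self._loader(self._model_name)
        return self._model

    def transcribe(self, audio_path):
        """Return the transcribed text of the given audio file."""
        result = self._get_model().transcribe(audio_path)
        return result["text"].strip()
```

Because Robot Framework turns the public methods of a library class into keywords, a class like this would give the robot a `Transcribe` keyword once the file is imported as a library.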

Building the robot

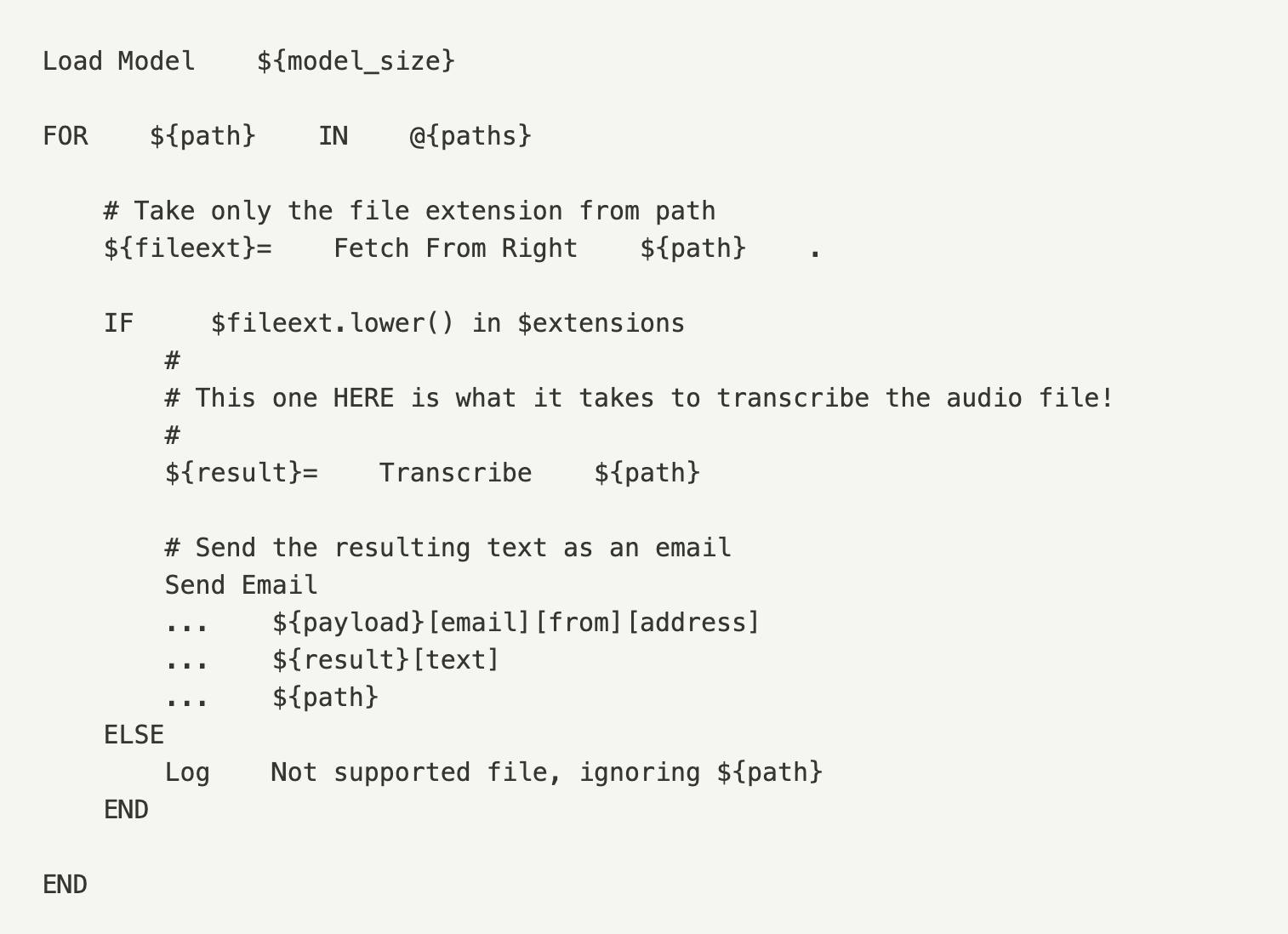

This robot is built to be triggered by an email sent to the robot’s address. The email becomes an input work item, the attachments are processed one by one and transcribed, and the resulting text is sent back to the original sender by email. For those fully committed, the full repository is available here.

The interesting part is the FOR loop that goes through each of the attachments with a supported file extension:
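The same idea can be sketched in plain Python. This is only an approximation of the loop's logic, not the robot's Robot Framework code: the extension list and both function names are assumptions I picked for illustration, and `transcribe` stands for whatever callable does the actual transcription.

```python
from pathlib import Path

# Hypothetical list of audio extensions; adjust to what your setup handles.
SUPPORTED_EXTENSIONS = {".mp3", ".wav", ".m4a", ".flac"}


def supported_attachments(paths):
    """Keep only the paths whose extension is in the supported list."""
    return [p for p in paths if Path(p).suffix.lower() in SUPPORTED_EXTENSIONS]


def transcribe_attachments(paths, transcribe):
    """Transcribe each supported attachment, keyed by its file name."""
    results = {}
    for path in supported_attachments(paths):
        results[Path(path).name] = transcribe(path)
    return results
```

Attachments with other extensions are simply skipped, so a signature image or a PDF in the same email does not break the run.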

Time to hit the run button to see if this flies!! 🤞 Follow my screen below and join the excitement.

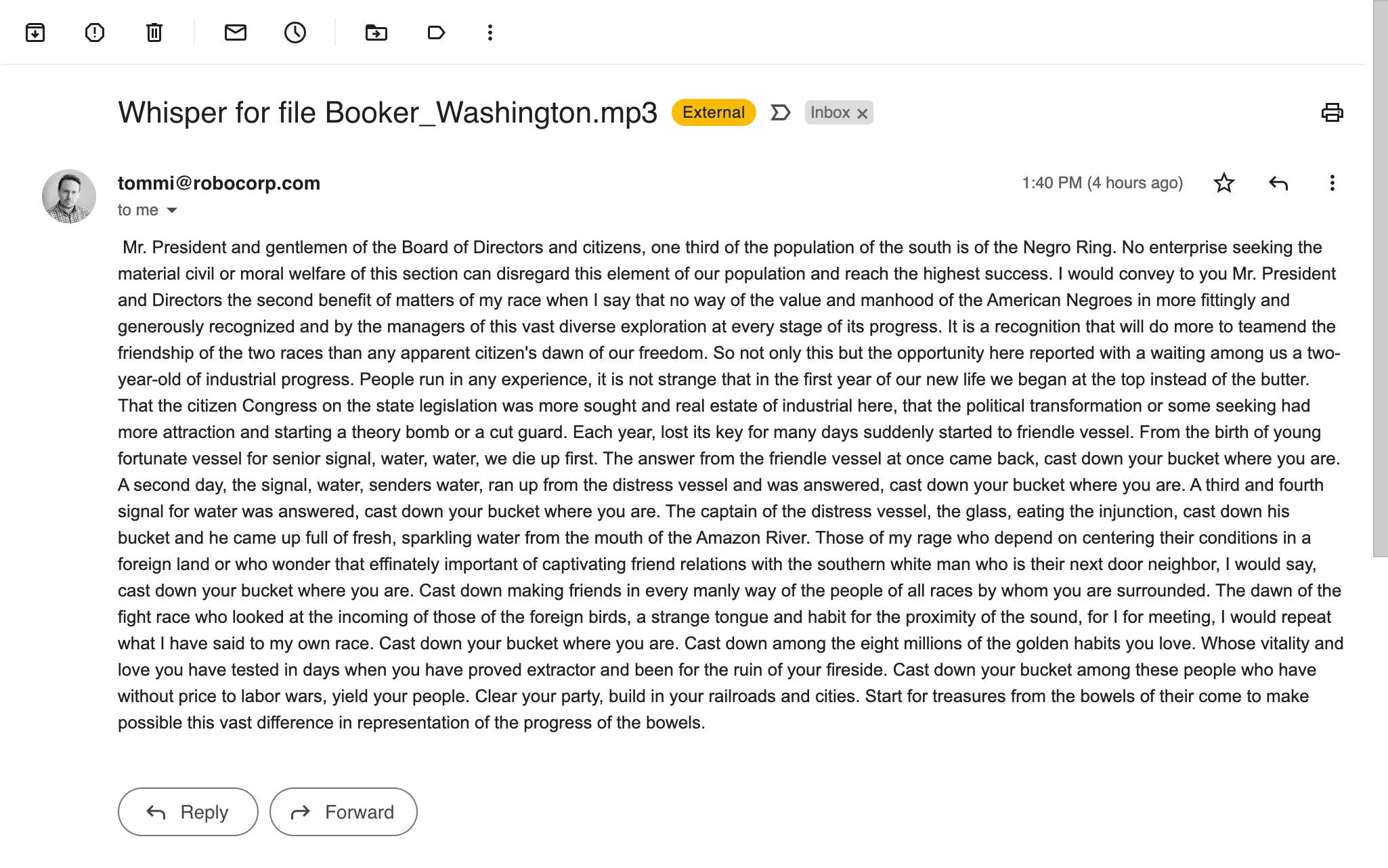

It indeed does (“at least on my machine”), and as a result, I am getting a surprisingly well-transcribed Booker T. Washington speech in my email inbox! 🎊 At a quick glance, you can spot only a few mistakes.

What about in real life?

While this was a fun experiment, using Whisper the way shown here would not be feasible in production use cases. Loading the model on every run makes it very slow and resource-heavy, which is entirely unnecessary. Instead, the model should be exposed to the robot through an API.
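To make the API idea a bit more concrete, here is a minimal sketch of a long-running service that loads the model once and serves many requests. Everything in it is an assumption for illustration: the function names, the JSON shape (`{"path": ...}` in, `{"text": ...}` out) and the use of Python's built-in `http.server`. Passing a file path also assumes the robot and the service share a filesystem; a real service would more likely accept the audio itself.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_handler(transcribe):
    """Build a request handler around an already-loaded transcribe callable."""

    class TranscribeHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            # Read the JSON request body: {"path": "<audio file path>"}
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length) or b"{}")
            text = transcribe(payload["path"])
            body = json.dumps({"text": text}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            # Keep the sketch quiet; a real service would log requests.
            pass

    return TranscribeHandler


def serve(transcribe, host="127.0.0.1", port=0):
    """Start the service in a background thread and return the server."""
    server = HTTPServer((host, port), make_handler(transcribe))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

With whisper installed, the service side could call `model = whisper.load_model("base")` once at startup and then `serve(lambda path: model.transcribe(path)["text"])`, so each robot run only pays for an HTTP request instead of a full model load.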

For anyone looking to implement speech-to-text use cases within robots today, I recommend using one of the already existing keyword libraries that have proven similarly powerful. For example, Google Cloud Platform has an easy way to convert customer service voice calls into actionable text data with just a few keywords in your robot.